Kakao Unveils “Kanana Safeguard,” Guardrail Models for AI Safety Verification and the First Such Open-Source Release by a Korean Company

- Kakao develops AI guardrail models to verify the safety and reliability of generative AI services; three models are released as open source on Hugging Face

- Built on Kakao’s proprietary Kanana language model, optimized for Korean language and culture

- Released under a license that permits commercial use, to help build a safer AI ecosystem

[May 27, 2025] Kakao is taking proactive steps to establish a safe and trustworthy environment for generative AI technologies and services.

Kakao (CEO Shina Chung) announced on May 27 that it has developed “Kanana Safeguard,” a suite of AI guardrail models designed to assess the safety and reliability of AI-generated content. Kakao is the first Korean company to release guardrail models as open source, making all three models publicly available to contribute to the AI ecosystem.

The recent boom in generative AI services has raised concerns about the risk of harmful content. Recognizing the urgent need for technical and regulatory safeguards, Kakao developed Kanana Safeguard as a foundational tool to help detect and prevent such risks. While many global tech companies have deployed specialized models to flag risky outputs, Kanana Safeguard stands out for its Korean-language expertise.

It is built on Kakao’s proprietary Kanana language model and trained on a custom dataset designed to reflect Korean linguistic and cultural contexts, giving it performance specialized for Korean. When evaluated using the F1 score, a standard metric that combines a model’s precision and recall into a single value, Kanana Safeguard outperformed leading global models on Korean-language safety tasks.
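For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision (the share of flagged items that are truly harmful) and recall (the share of truly harmful items that get flagged), which simplifies to 2·TP / (2·TP + FP + FN). The minimal Python sketch below computes it from confusion-matrix counts; the function name `f1_score` and the example counts are hypothetical illustrations, not Kakao’s evaluation code or data.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Compute F1 from true positives, false positives, and false negatives.

    Hypothetical helper for illustration only; F1 is the harmonic mean
    of precision and recall.
    """
    # Precision: of everything the guardrail flagged, how much was truly harmful
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of everything truly harmful, how much the guardrail flagged
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for an "unsafe" class (not Kakao's benchmark results)
print(round(f1_score(tp=80, fp=20, fn=10), 4))  # prints 0.8421
```

Because F1 penalizes both missed harmful content (low recall) and over-flagging of benign content (low precision), a guardrail cannot score well by being either too lenient or too strict.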

The three models released as open source are each optimized for detecting a different type of risk:

- Kanana Safeguard detects harmful content in user prompts or AI responses, including hate speech, harassment, and sexually explicit material.

- Kanana Safeguard-Siren flags requests that may raise legal concerns, such as those involving personal data or intellectual property.

- Kanana Safeguard-Prompt detects adversarial prompts from users attempting to exploit or manipulate AI systems.

Available for download on Hugging Face, all three models are distributed under the Apache 2.0 license, which permits commercial use, modification, and redistribution. Kakao plans to continue improving the models through regular updates.

Kakao AI Safety Lead Kyung-hoon Kim commented, “Since the emergence of generative AI, AI ethics and safety have come into the spotlight both in Korea and abroad. We will take a proactive approach to responsible AI development by raising awareness and developing technologies that reflect social values.” (E.O.D.)

[Reference]

* Kanana Safeguard’s open-source models on Hugging Face: https://huggingface.co/collections/kakaocorp/kanana-safeguard-68215a02570de0e4d0c41eec

* Official Kakao Tech blog: “Introducing the Kanana Safeguard series, the guardrail models of Kakao”: https://tech.kakao.com/posts/705

- Press Release Kakao Strengthens Partnership with Google, for Service Innovation Through AI-powered Android Technology

#kakao#Google#partnership

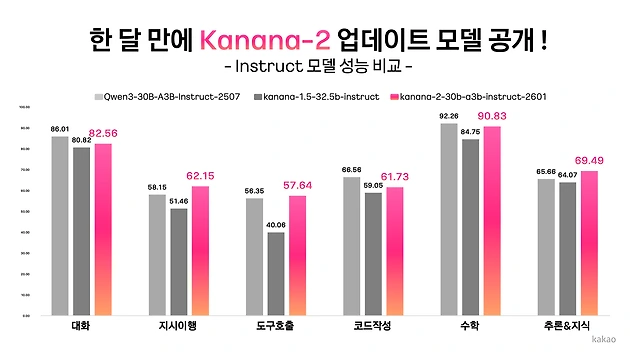

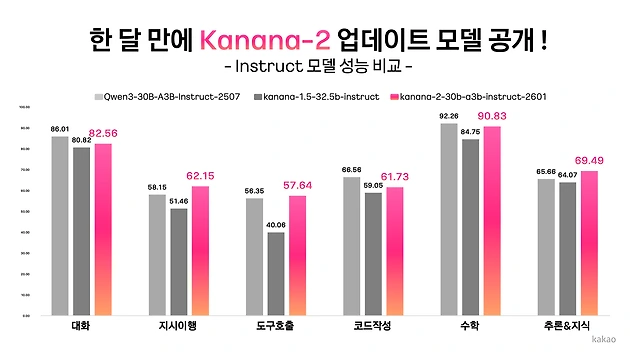

#kakao#Google#partnership - Press Release Kakao Releases Four Additional Open-Source Versions of the Upgraded “Kanana-2” Model

#kakao#Kanana

#kakao#Kanana - Press Release Kakao Hits All-Time High Annual Performance in 2025—KRW 8.09 Trillion in Revenue, KRW 732 Billion in Operating Profit

#kakao#profit#Q4 2025

#kakao#profit#Q4 2025