Kakao Releases One of the World’s Highest-Performing Multimodal Language Models and Becomes First in Korea to Launch Open Source MoE Model

- Released just two months after the four language models unveiled in May, proving Kakao’s proprietary model development capabilities

- Kakao’s participation in the “Proprietary AI Foundation Model Project” based on its proprietary model development capabilities and service operation experience

- A multimodal language model expanded from Kanana 1.5, developed from scratch—high performance compared to domestic and international models

- First domestic open source release of the “MoE model,” which excels in efficient computing resource utilization and cost savings

- Continuous release of self-developed high-performance models, contributing to strengthening the self-reliance and technological competitiveness of the domestic AI ecosystem

[July 24, 2025] Kakao has once again demonstrated its AI technology development capabilities by open-sourcing two models: a lightweight multimodal language model that is the highest-performing among publicly available models in Korea, and a mixture-of-experts (MoE) model, the first of its kind to be released as open source in the country.

Kakao (CEO Shina Chung) announced on July 24 through Hugging Face that the company has released the lightweight multimodal language model “Kanana-1.5-v-3b” with image information understanding and instruction execution capabilities, along with the MoE language model “Kanana-1.5-15.7b-a3b” as open source.

Just two months after releasing four Kanana-1.5 language models in May, Kakao has once again demonstrated its proprietary model design capabilities by open-sourcing additional models. As a participant in the Ministry of Science and ICT’s Proprietary AI Foundation Model Project, Kakao will leverage its self-developed models and its experience operating large-scale services such as KakaoTalk to help make AI accessible to all citizens and to strengthen the nation’s competitive edge in AI.

# A lightweight multimodal language model that responds in natural language when given image or text input—expanding on Kanana 1.5 LLM, a proprietary model developed via a “from-scratch” approach

As a multimodal language model capable of processing image data as well as text, Kanana-1.5-v-3b is founded on the Kanana 1.5 model, released as open source in late May. Kanana 1.5 was developed using the “from-scratch” approach, built entirely on Kakao’s proprietary technology from the initial development stage to the final stage.

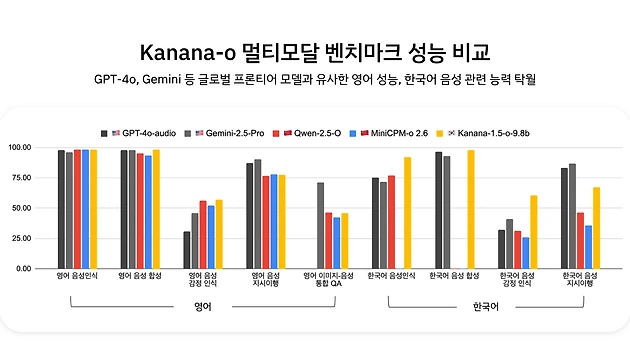

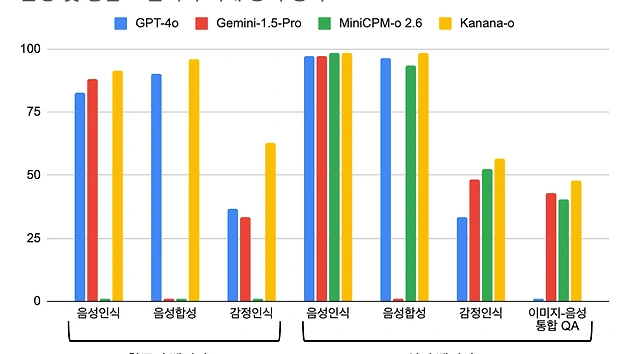

The multimodal language model “Kanana-1.5-v-3b” features exceptional instruction-following performance, accurately understanding the intent of the user’s question, as well as excellent Korean and English image understanding capabilities. Despite being a lightweight model, its ability to understand Korean and English documents expressed as images is comparable to that of the global multimodal language model GPT-4o.

The model also achieved the highest Korean benchmark scores among similar-sized domestic and international public models, and performed at a level comparable to overseas open source public models on various English benchmarks. On an instruction-following benchmark, it achieved 128% of the performance of similar-sized multimodal language models publicly available in Korea.

Kakao maximized the performance of Kanana-1.5-v-3b through human preference alignment training and knowledge distillation. Knowledge distillation is a method of training a relatively small “student” model with the help of a high-performance large “teacher” model. By learning from both the large model’s predicted probability distribution and the correct answer itself, the smaller model acquires more sophisticated and generalizable prediction capabilities. As a result, despite its lightweight structure, it can approach or even exceed the precision and language comprehension of larger models.
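This release does not describe Kakao’s exact training objective. As a rough illustration of the idea above, the sketch below implements one common form of distillation loss for a single prediction over a tiny vocabulary: a KL-divergence term that pulls the student toward the teacher’s softened probability distribution, blended with ordinary cross-entropy on the correct answer. The function names and the `temperature` and `alpha` parameters are illustrative assumptions, not Kakao’s implementation.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() from overflowing."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) between two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, target_index,
                      temperature=2.0, alpha=0.5):
    # Soft-target term: pull the student toward the teacher's softened distribution.
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    # Scaling by T^2 is a common convention that keeps this term's gradients
    # comparable in size when the temperature changes.
    soft_loss = kl_divergence(p_teacher, p_student_soft) * temperature ** 2
    # Hard-target term: ordinary cross-entropy against the correct answer.
    p_student = softmax(student_logits)
    hard_loss = -math.log(p_student[target_index])
    # alpha trades off learning from the teacher vs. learning from the label.
    return alpha * soft_loss + (1 - alpha) * hard_loss

# Hypothetical logits for a 3-token vocabulary; token 2 is the correct answer.
student = [1.0, 0.5, 2.0]
teacher = [1.2, 0.3, 2.5]
print(distillation_loss(student, teacher, target_index=2))
```

When the student’s logits already match the teacher’s, the soft-target term is zero and only the cross-entropy on the label remains; a higher `temperature` flattens both distributions so the student also learns how the teacher ranks the wrong answers.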

Building on the strengths of a lightweight multimodal language model, Kanana-1.5-v-3b can be applied flexibly across many fields, including image and text recognition, writing fairy tales and poems, recognizing Korean cultural heritage sites and tourist attractions, understanding charts, and solving math problems. For example, when asked, “Give me a brief description of the location where this photo was taken,” the model accurately responds, “The background of this photograph is the Cheonggyecheon Stream in Seoul,” reflecting its strong recognition of locations in Korea. The model can also be effective in fields requiring real-time performance and efficiency, such as image search and content classification.

Looking beyond model performance alone, Kakao is now concentrating on developing AI with multimodal understanding, user instruction execution, and reasoning abilities, which unlock the potential to reason and act like humans. In the second half of the year, Kakao plans to disclose the results of its reasoning model, which is essential for implementing agent-type AI.

# Harbinger of a new trend in AI model development—incorporating cost-efficiency as well as performance through innovative MoE model architecture

On the same day, Kakao also released as open source a language model with an MoE structure, which differs clearly from the conventional “dense” model.

In conventional dense models, all parameters participate in computation whenever input data is processed. The MoE structure, by contrast, activates only the few expert subnetworks selected for each input, so it uses computing resources efficiently and reduces costs. Thanks to these advantages, MoE has become a major trend in AI model development in the global market.
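To make “activates only certain experts” concrete, here is a minimal sketch of top-k routing for a single token, assuming a linear router and four toy experts that simply scale their input. The choice of `top_k=2` and every name below (`moe_forward`, `make_expert`, the router weights) are hypothetical; the release does not state how many experts Kanana-1.5-15.7b-a3b has or how many it activates per token. Production MoE layers also operate on batched tensors and typically add auxiliary load-balancing terms, which are omitted here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def moe_forward(x: Vector,
                router_weights: List[Vector],
                experts: List[Callable[[Vector], Vector]],
                top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Send one token through only the top_k highest-scoring experts."""
    # The router assigns every expert a score via a linear projection of the token.
    scores = [dot(w, x) for w in router_weights]
    # Keep the top_k experts; all other experts are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected scores only, so the mixing weights sum to 1.
    m = max(scores[i] for i in chosen)
    exps = [math.exp(scores[i] - m) for i in chosen]
    total = sum(exps)
    gates = [e / total for e in exps]
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only selected experts are ever called
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy experts: each one scales its input and records that it ran.
calls: List[int] = []

def make_expert(idx: int, scale: float) -> Callable[[Vector], Vector]:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * t for t in v]
    return expert

experts = [make_expert(i, float(i + 1)) for i in range(4)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
out, chosen = moe_forward([2.0, 1.0], router, experts, top_k=2)
print(chosen, calls)  # prints [0, 3] [0, 3]
```

For the input `[2.0, 1.0]` the router scores are 2.0, 1.0, -2.0, and 1.5, so only experts 0 and 3 run; experts 1 and 2 cost nothing for this token. That skipped work is the source of the efficiency described above: total parameter count grows with the number of experts, while per-token compute grows only with `top_k`.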

The Kanana-1.5-15.7b-a3b model, which applies the MoE architecture, activates only about 3B of its 15.7B total parameters during inference. To save on training time and cost, Kakao built this model by upcycling its 3B-scale model, Kanana-Nano-1.5-3B. Upcycling replicates the existing multi-layer perceptron (MLP) layers of a dense model and converts them into multiple expert layers, which is more efficient than developing a model from scratch. Although only 3B parameters are activated, its performance is equivalent to or exceeds that of Kanana-1.5-8B.
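The release does not give the exact upcycling recipe (number of experts, router initialization, or top-k). The sketch below assumes a toy two-layer ReLU MLP, eight experts, top-2 routing, and a small randomly initialized router; all names are hypothetical. It shows the core idea of the paragraph above: each expert starts as an exact copy of the dense MLP, so immediately after conversion the MoE layer reproduces the dense layer’s output (the routing weights sum to 1), and subsequent training lets the experts diverge.

```python
import copy
import math
import random

def mlp(params, x):
    """Dense two-layer MLP with ReLU: W2 . relu(W1 . x)."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in params["w1"]]
    return [sum(w * hi for w, hi in zip(row, h)) for row in params["w2"]]

def upcycle(dense_params, num_experts, d_model, seed=0):
    """Turn one dense MLP into an MoE layer by copying its weights into every expert."""
    # deepcopy gives each expert its own weights so they can diverge during training.
    experts = [copy.deepcopy(dense_params) for _ in range(num_experts)]
    rng = random.Random(seed)
    # The router is new and randomly initialized; the experts start out identical.
    router = [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(num_experts)]
    return {"experts": experts, "router": router}

def moe_mlp(layer, x, top_k=2):
    """Top-k routed forward pass over the upcycled experts."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in layer["router"]]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    m = max(scores[i] for i in chosen)
    exps = [math.exp(scores[i] - m) for i in chosen]
    gates = [e / sum(exps) for e in exps]
    out = [0.0] * len(layer["experts"][0]["w2"])
    for g, i in zip(gates, chosen):
        y = mlp(layer["experts"][i], x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out

# Hypothetical dense MLP: d_model = 2, hidden size = 3.
dense = {"w1": [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8]],
         "w2": [[1.0, 0.0, 0.5], [0.2, -0.3, 0.1]]}
layer = upcycle(dense, num_experts=8, d_model=2)
x = [1.0, 2.0]
dense_out = mlp(dense, x)
moe_out = moe_mlp(layer, x)
print(dense_out, moe_out)  # equal up to floating-point rounding
```

Because the experts reuse already-trained weights, training starts from the dense model’s quality rather than from random initialization, which is why upcycling saves time and cost. For scale, about 3B active out of 15.7B total parameters means roughly 19% of the model’s weights participate in each token’s computation.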

Kakao’s MoE model can provide practical help to companies and researchers seeking to build high-performance AI infrastructure at low cost. In particular, because it uses only a limited portion of its parameters during inference, it is well suited to implementing low-cost, high-efficiency services, which further increases its utility.

Through the open source release of these models, Kakao aims to set a new standard in the AI model ecosystem and lay the foundation for more researchers and developers to harness efficient, powerful AI technology. Kakao will also contribute to strengthening the independence and technological competitiveness of the domestic AI ecosystem by continuously upgrading its own technology-based models and, through model scaling, taking on the development of ultra-large models at the level of global flagships.

Kakao Kanana Performance Lead Byung-hak Kim stated, “This open source release is a milestone in terms of cost efficiency and performance. We have met the two goals of service application and technological independence, going beyond the simple advancement of model architecture.”

Last year, Kakao unveiled the lineup of its self-developed AI model “Kanana,” and has been sharing the performance and development processes of various models. In May, Kakao released four Kanana language models with significantly improved performance, following the open source release of its self-developed model in late February. Notably, to contribute to the domestic AI ecosystem, the company allowed commercial use by applying the Apache 2.0 license, providing researchers and startups with the foundation to freely experiment with and deploy domestically developed large language models (LLMs). (E.O.D.)

[Reference]

* Open source release of Kanana models on Hugging Face: https://huggingface.co/kakaocorp

* Official Kakao Tech blog posts

1) Development of Kanana-1.5-v-3b: https://tech.kakao.com/posts/714

2) Development of Kanana-MoE model: https://tech.kakao.com/posts/716
