Kakao Unveils Performance of Two Advanced Multimodal Language Models

- Further upgrades to the integrated multimodal language model Kanana-o, which simultaneously understands and responds to text, voice, and images, with enhanced instruction-following capabilities

- Proprietary datasets enable a wide range of tasks, while improved situational emotion recognition and expression achieve top-tier results in Korean speech benchmarks

- Revealing the performance of Kanana-v-embedding, a core technology for image-based search, which combines language model reasoning with advanced search capabilities and is already in use across Kakao’s internal services

- Ongoing research into lightweight multimodal models optimized for on-device environments, alongside plans to develop the high-performance, high-efficiency Kanana-2 within the year, advancing toward truly human-like AI interaction

[December 12, 2025] Kakao has unveiled the latest research achievements behind its advanced multimodal AI technologies that see, hear, and speak like humans, with a deep understanding of the Korean language and cultural context.

On December 12, Kakao (CEO Shina Chung) shared through its tech blog the development process and performance results of two proprietary AI models: Kanana-o, an integrated multimodal language model optimized for Korean contexts, and Kanana-v-embedding, a multimodal embedding model. Through this disclosure, Kakao once again demonstrated the technological maturity and competitiveness of its in-house AI capabilities.

Kanana-o is an integrated multimodal language model that understands text, speech, and images simultaneously and delivers real-time responses. First introduced in May, the model stands out for its exceptional understanding of Korean linguistic nuances compared to global models, as well as for its natural, expressive conversational abilities.

Kakao pinpointed a limitation in existing multimodal models: while they perform strongly with text input, their responses often become simplistic and less capable of reasoning in voice-based conversations. To address this issue, Kakao significantly enhanced Kanana-o’s instruction-following capabilities, allowing the model to grasp implicit user intent and handle complex requests more effectively. By training the model on proprietary datasets, Kakao ensured robust performance across diverse multimodal inputs and outputs. Beyond basic question-and-answer tasks, Kanana-o now excels in summarization, emotion and intent interpretation, error correction, format transformation, and translation.

In addition, the application of high-quality speech data and Direct Preference Optimization (DPO) techniques allowed the model to learn subtle nuances in intonation, emotion, and breathing. As a result, Kanana-o can express vivid emotions such as joy, sadness, anger, and fear, as well as more delicate emotional variations driven by changes in tone and timbre. Kakao also built a podcast-style dataset featuring natural exchanges between hosts and guests, enabling smooth, uninterrupted multi-turn conversations.

Benchmark evaluations show that Kanana-o delivers English speech performance comparable to GPT-4o, while achieving markedly superior results in Korean speech recognition, synthesis, and emotion recognition. Looking ahead, Kakao plans to further evolve the model toward natural, full-duplex conversations and the real-time generation of context-aware soundscapes.

Kakao also revealed Kanana-v-embedding, a core technology for image-based search and a multimodal model tailored to Korean language and cultural contexts, capable of processing text and images simultaneously. The model supports text-to-image search, contextual searches based on selected images, and document searches that include images.

Designed with real-world service deployment in mind, Kanana-v-embedding demonstrates an outstanding understanding of the Korean language and culture. It accurately retrieves images for proper nouns such as “Gyeongbokgung” or “bungeoppang,” and even correctly interprets misspelled terms like “Hamelton cheese” by understanding context. The model also excels at handling complex queries, such as “a group photo taken wearing hanbok,” filtering out images that only partially meet the specified conditions.

Currently, Kanana-v-embedding has been applied to Kakao’s internal services to analyze and review similarities among advertising creatives. The company plans to expand the model to video and audio domains, broadening its deployment across a wider range of services.

Meanwhile, building on Kanana-1.5, introduced in May with a focus on enabling Agentic AI, Kakao is actively researching lightweight multimodal models that can operate in on-device environments, such as mobile devices. In tandem with these efforts, the company is preparing to develop Kanana-2, a high-performance, high-efficiency model based on a Mixture of Experts (MoE) architecture.

Kakao Kanana Performance Lead Byung-hak Kim stated, “With Kakao’s in-house AI model, Kanana, we dream of going beyond simply delivering information. The model will evolve into an AI that understands users’ emotions and communicates in a familiar and natural way by deepening its grasp of the Korean context and expression. By embedding AI technologies into real service environments, we will continue to create meaningful AI experiences in users’ everyday lives and focus on realizing AI that can truly interact like a human.” (E.O.D.)

[Reference]

* Official Kakao Tech blog posts

Evolution of Kanana-o: https://tech.kakao.com/posts/802

Development journal of Kakao’s multimodal embedding model: https://tech.kakao.com/posts/801

- Press Release 발행일 2026.02.12 Kakao Hits All-Time High Annual Performance in 2025—KRW 8.09 Trillion in Revenue, KRW 732 Billion in Operating Profit

#kakao#profit#Q4 2025

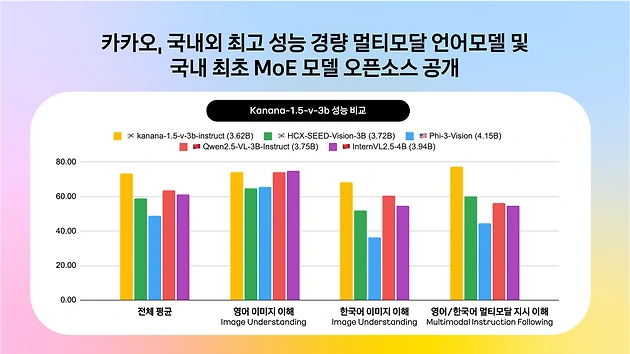

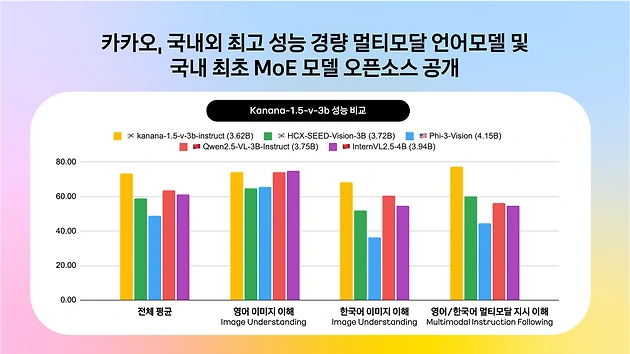

#kakao#profit#Q4 2025 - Press Release 발행일 2025.07.24 Kakao Releases One of the World’s Highest-Performing Multimodal Language Model and Becomes First in Korea to Launch Open Source MoE Model

#kakao#Multimodal Language Model#MoE Model

#kakao#Multimodal Language Model#MoE Model - Press Release 발행일 2026.02.12 Kakao Strengthens Partnership with Google, for Service Innovation Through AI-powered Android Technology

#kakao#Google#partnership

#kakao#Google#partnership