Kakao’s Open-Source Release of “Kanana-2,” a Model Optimized for Agentic AI Implementation

- Three newly improved high-performance, high-efficiency models released on Hugging Face, including the company’s first open-source reasoning model

- Focus on enhancing tool-calling and instruction-following capabilities for agentic AI

- MLA and MoE techniques reduce computational costs and improve response speed, achieving performance comparable to the latest models with similar architectures

- Plans to develop models tailored for advanced AI agent scenarios and continue advancing lightweight on-device models

[December 19, 2025] Kakao (CEO Shina Chung) announced that it has open-sourced its next-generation in-house language model, Kanana-2, on Hugging Face, showcasing its technological competitiveness in building high-performance, high-efficiency models optimized for agentic AI.

Since unveiling its original in-house AI model lineup, Kanana, last year, Kakao has steadily expanded its open-source offerings from lightweight models to Kanana-1.5, which specializes in solving complex tasks. The newly released Kanana-2 is a major leap forward in both performance and efficiency. It is designed to function more like an AI “collaborator,” capable of understanding the context of user commands and acting proactively.

The release includes three models: ▲a Base model; ▲an Instruct model fine-tuned for stronger instruction-following performance; and ▲a newly introduced Thinking model specializing in reasoning tasks. In particular, Kakao has made all training-stage weights publicly available, enabling developers to fine-tune the models freely using their own datasets.

Kanana-2 delivers significant improvements in tool-calling and instruction-following capabilities, two core requirements for agentic AI. Multi-turn tool-calling performance has improved more than threefold compared to the previous model (Kanana-1.5-32.5B), allowing the model to better understand and execute complex, step-by-step user requests. Language support has also expanded from Korean and English to six languages: Korean, English, Japanese, Chinese, Thai, and Vietnamese.

From a technical standpoint, Kakao adopted advanced architectural innovations to maximize efficiency. The model incorporates Multi-head Latent Attention (MLA) to process long inputs more efficiently, along with a Mixture of Experts (MoE) architecture that activates only the necessary parameters during inference. This enables efficient handling of long-context inputs with lower memory use, reduced computational costs, and improved response speed. The model also demonstrates strong high-throughput performance, enabling it to handle large volumes of simultaneous requests quickly.
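To illustrate the general idea behind "activates only the necessary parameters," here is a minimal sketch of top-k expert routing, a common way Mixture of Experts layers are built. It is not Kanana-2's actual implementation: the sizes (`DIM`, `NUM_EXPERTS`, `TOP_K`), the random weights, the helper names, and the choice to apply softmax over only the selected experts' scores are all assumptions made for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """Build a toy expert: a single dim x dim linear map (hypothetical)."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


def moe_forward(x, router_weights, experts, top_k):
    """Route one token vector to its top_k experts and mix their outputs."""
    # The router produces one score per expert for this token.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Only the top_k highest-scoring experts are evaluated; the rest are skipped.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights are normalized over the chosen experts only (an assumption).
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        output = [o + gate * yi for o, yi in zip(output, y)]
    return output, chosen


# Toy sizes chosen for readability, not taken from Kanana-2.
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
router_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_forward(token, router_weights, experts, TOP_K)
print(f"experts evaluated: {sorted(chosen)} ({TOP_K} of {NUM_EXPERTS})")
```

In this toy setup only 2 of the 8 expert weight matrices are multiplied for each token, which is the sense in which a MoE model can hold many parameters while spending compute on only a fraction of them per request.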

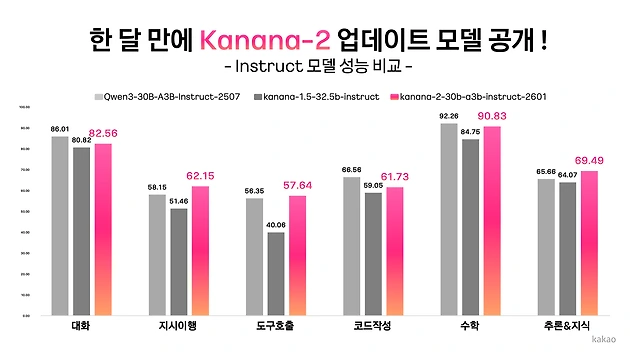

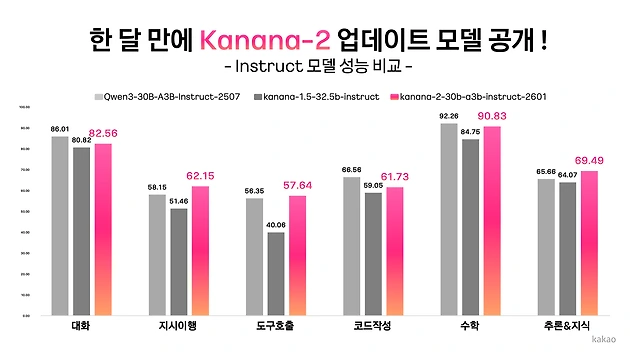

In terms of performance benchmarks, the model also proved its competitive edge at a global level. The Instruct model achieves performance on par with Qwen3-30B-A3B, one of the latest models with a similar architecture. It was also previewed to participants in an AI Agent Competition co-hosted by Kakao and the Korean Institute of Information Scientists and Engineers earlier this month, demonstrating strong practical usability in real-world agent development environments. The Thinking model likewise showed performance comparable to Qwen3-30B-A3B in Thinking Mode across benchmarks requiring advanced reasoning capabilities, highlighting its potential as a reasoning-focused AI.

Looking ahead, Kakao plans to scale up model sizes based on the same MoE architecture and further enhance high-level instruction-following abilities. The company will also continue developing models tailored for complex AI agent scenarios and advancing lightweight on-device models.

“The foundation of innovative AI services lies in the performance and efficiency of the underlying language model. Beyond focusing solely on high performance, we aim to develop practical AI models that can be effectively deployed in real-world services, and to continue sharing them as open source to contribute to the growth of the global AI research ecosystem,” said Byung-hak Kim, Performance Lead of Kanana at Kakao. (E.O.D.)

[Reference]

* Open source release of the Kanana-2.0 model on Hugging Face:

https://huggingface.co/collections/kakaocorp/kanana-2

* Kanana-2.0 tech blog
